Scope of the Problem

In content filtering, a false positive occurs when an AI system wrongly flags a legitimate post as inappropriate or as violating platform policy. The problem affects everyone: users who feel their voices are being silenced, researchers working toward a safer online world, and businesses trying to keep their platforms free of misuse. Industry estimates put the false positive rate of traditional AI content filtering tools at 10–15%.
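
The 10–15% figure depends on which rate is meant. Below is a minimal sketch, assuming the standard definition FP / (FP + TN), the share of legitimate posts that get flagged. It also computes FP / (FP + TP), the share of flagged posts that turn out to be legitimate, which is easy to confuse with it. The `ConfusionCounts` type and the audit numbers are hypothetical, for illustration only.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts from comparing filter decisions against human-verified labels."""
    true_positives: int   # harmful posts correctly flagged
    false_positives: int  # legitimate posts wrongly flagged
    true_negatives: int   # legitimate posts correctly allowed
    false_negatives: int  # harmful posts wrongly allowed


def false_positive_rate(c: ConfusionCounts) -> float:
    """Share of legitimate posts that the filter flagged: FP / (FP + TN)."""
    legitimate = c.false_positives + c.true_negatives
    if legitimate == 0:
        raise ValueError("no legitimate posts in the sample")
    return c.false_positives / legitimate


def false_discovery_rate(c: ConfusionCounts) -> float:
    """Share of flagged posts that were actually legitimate: FP / (FP + TP)."""
    flagged = c.false_positives + c.true_positives
    if flagged == 0:
        raise ValueError("no posts were flagged")
    return c.false_positives / flagged


# Hypothetical audit of 10,000 posts (illustrative numbers, not real data).
counts = ConfusionCounts(true_positives=450, false_positives=1140,
                         true_negatives=8360, false_negatives=50)
print(f"FPR: {false_positive_rate(counts):.1%}")   # prints FPR: 12.0%
print(f"FDR: {false_discovery_rate(counts):.1%}")  # prints FDR: 71.7%
```

With these illustrative counts, a 12% false positive rate means roughly seven in ten flags land on legitimate posts, because legitimate content vastly outnumbers harmful content in the sample.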

The Next Frontier: Making AI More Accurate with Better Algorithms

Developers are working to reduce false positives by building increasingly sophisticated algorithms into AI systems. This work centers on deep learning models that understand context better than earlier technologies. Training these models on larger and more diverse datasets helps the AI distinguish genuinely harmful content from safe content that merely shares features often associated with violations. Researchers at the leading edge report cutting false positive rates by 30% in proof-of-concept systems.

Layered Filtering Approaches

Multi-stage (layered) content filtering passes each item through several layers of analysis. An initial AI model, usually a machine learning classifier, tags the content; subsequent layers, some of which may also be AI systems, refine the decision by re-examining tagged (or untagged) content against different criteria, in some cases using more advanced models or kernel functions. The goal is to lower false positives while holding the false negative rate (FNR) fixed, reducing the risk that the final decision is swayed by a single stage's false positives or by biases in its training data. Companies that deploy layered filtering report reducing false positives by as much as 20% without weakening detection of genuinely inappropriate content.
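
A minimal sketch of a two-stage cascade, assuming each layer is a function that returns a harm score in [0, 1]. The scorers `fast_stage` and `context_stage` and their word lists are hypothetical toys standing in for trained models; the point is the control flow, in which a later layer can clear a post the first layer flagged.

```python
from typing import Callable, Sequence

# A stage maps a post to a harm score in [0, 1]. Both scorers below are
# hypothetical stand-ins for trained models.
Scorer = Callable[[str], float]

FLAG_TERMS = {"breast", "shoot", "kill"}
SAFE_CONTEXT = {"cancer", "screening", "photo", "process", "time"}


def fast_stage(post: str) -> float:
    """Cheap, high-recall first pass: any flagged term scores 1.0."""
    words = set(post.lower().split())
    return 1.0 if words & FLAG_TERMS else 0.0


def context_stage(post: str) -> float:
    """Slower second pass: discounts flagged terms that appear in a safe context."""
    words = set(post.lower().split())
    if not words & FLAG_TERMS:
        return 0.0
    return 0.2 if words & SAFE_CONTEXT else 0.9


def layered_filter(post: str, stages: Sequence[Scorer], threshold: float = 0.5) -> bool:
    """Flag a post only if every stage scores it at or above the threshold.

    Each stage runs only if all earlier stages flagged the post, so later
    stages can overturn first-pass false positives.
    """
    for stage in stages:
        if stage(post) < threshold:
            return False  # this layer cleared the post
    return True


stages = [fast_stage, context_stage]
posts = [
    "Schedule your breast cancer screening today",
    "I will kill you",
    "Nice photo of the sunset",
]
for p in posts:
    print(layered_filter(p, stages), "-", p)  # prints False, True, False in turn
```

Requiring every layer to agree can only remove flags, so the trade-off is that a harmful post cleared by a later layer becomes a false negative; later layers therefore need to stay highly sensitive to genuinely harmful content.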

Incorporating Human Oversight

Even with these advances in AI, human oversight remains essential for handling false positives. When an AI system makes a contested decision, human reviewers confirm or overturn it, and their feedback helps train the AI to make better decisions and reduce errors. Leading platforms use hybrid teams of AI and human reviewers to moderate flagged content, helping ensure that decisions are fair and account for the messy human context that AI alone tends to overlook.
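
One common way to combine AI and human review is to route content by model confidence. A minimal sketch, assuming the model outputs a harm score in [0, 1]; the `Route` names and the 0.3 and 0.9 thresholds are hypothetical values chosen for illustration, not settings from any particular platform.

```python
from enum import Enum


class Route(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


def route_post(harm_score: float, allow_below: float = 0.3,
               remove_at_or_above: float = 0.9) -> Route:
    """Automate confident decisions; send the uncertain middle band to humans."""
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be in [0, 1]")
    if not allow_below < remove_at_or_above:
        raise ValueError("thresholds must satisfy allow_below < remove_at_or_above")
    if harm_score < allow_below:
        return Route.ALLOW
    if harm_score >= remove_at_or_above:
        return Route.REMOVE
    return Route.HUMAN_REVIEW


for score in (0.05, 0.55, 0.97):
    print(score, route_post(score).value)
# prints:
# 0.05 allow
# 0.55 human_review
# 0.97 remove
```

Widening the middle band sends more posts to human reviewers, which cuts automated false positives at the cost of a heavier review workload.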

In Practice: Feedback Loops and Lessons Learned

Many AI filtering systems include feedback loops that let them learn gradually from their mistakes. When a decision is flagged as a false positive, information about the error is fed back into the system, which adjusts the parameters responsible so that similar false positives become less likely. This continuous learning is what lets AI systems keep up with rapidly changing content and slang, which static filtering models struggle to track.
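
The text does not say how the parameters are adjusted. One standard technique is online logistic regression, where each reviewer verdict triggers a single gradient step on log loss. A minimal sketch under that assumption; `OnlineFilter`, its bag-of-words features, and the learning rate are hypothetical choices for illustration.

```python
import math
from collections import defaultdict
from typing import Dict, Set


class OnlineFilter:
    """Bag-of-words logistic regression updated one reviewed post at a time.

    A hypothetical stand-in for a production model, showing how a reviewer's
    verdict can nudge the weights behind a false positive.
    """

    def __init__(self, learning_rate: float = 0.5) -> None:
        self.weights: Dict[str, float] = defaultdict(float)
        self.bias = 0.0
        self.learning_rate = learning_rate

    @staticmethod
    def _tokens(post: str) -> Set[str]:
        return set(post.lower().split())

    def score(self, post: str) -> float:
        """Estimated probability that the post is harmful (numerically stable sigmoid)."""
        z = self.bias + sum(self.weights[t] for t in self._tokens(post))
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)

    def learn(self, post: str, is_harmful: bool) -> None:
        """One stochastic gradient step on log loss using the reviewer's label."""
        error = self.score(post) - (1.0 if is_harmful else 0.0)
        for t in self._tokens(post):
            self.weights[t] -= self.learning_rate * error
        self.bias -= self.learning_rate * error


model = OnlineFilter()
model.weights["breast"] = 3.0  # pretend the initial model over-weights this term
post = "breast cancer screening saves lives"
before = model.score(post)
model.learn(post, is_harmful=False)  # a reviewer marks the flagged post as safe
after = model.score(post)
print(f"harm score before: {before:.2f}, after: {after:.2f}")
```

A single correction lowers the score for this post and for others sharing its words. Production systems often batch such corrections into periodic retraining rather than updating after every post.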

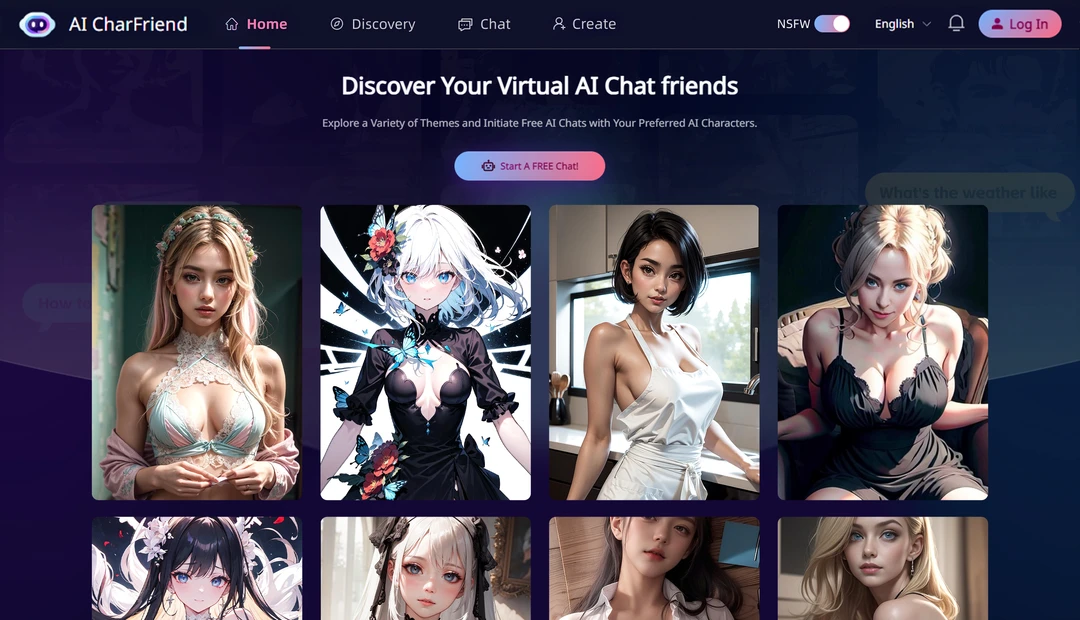

How NSFW AI Can Help Mitigate False Positives

In the NSFW (Not Safe For Work) space in particular, AI plays a vital role in drawing the line between harmful and innocent content. NSFW AI is designed specifically for borderline content, where mistakes are most likely. By focusing on nuanced understanding and contextual awareness, NSFW AI systems significantly reduce false positives on ambiguous, gray-area content.

With these techniques and strategies, AI becomes progressively better at accurately filtering objectionable material, keeping digital spaces safe while ensuring people remain free to express themselves.